In today's era of hybrid data ecosystems, organizations often find themselves straddling multiple data platforms for optimal performance and functionality. If your organization utilizes both Snowflake and Microsoft data stack, you might encounter the need to seamlessly transfer data from Snowflake Data Warehouse to Azure Lakehouse.

Fear not! This blog post will walk you through the detailed step-by-step process of achieving this data integration seamlessly.

As an example, the below Fig:1 shows a Customer table in the Snowflake Data warehouse.

To get this data from Snowflake to Azure Data Lakehouse we can

use cloud ETL tool like Azure Data Factory (ADF), Azure Synapse Analytics or

Microsoft Fabric. For this blog post, I have used Azure Synapse Analytics to

extract the data from Snowflakes. There are two main activities involved from

Azure Synapse Analytics:

A. Creating Linked Service

B. Creating a data pipeline with Copy activity

Activity A: Creating Linked service

In the Azure Synapse Analytics ETL tool you need to create a Linked Service

(LS), this makes sure connectivity between Snowflake and Azure Synapse

Analytics.

Please find the steps to create Linked Service:

Step 1) Azure Synapse got built in connector for Snowflake, Please click new Linked service and search the connector "Snowflake" and click next as shown in Fig 2 below

b) Linked service description: Provide the description of the Linked service.

c) Integration runtime: Integration runtime is required for Linked service, Integration Runtime (IR) is the compute infrastructure used by Azure Data Factory and Azure Synapse pipelines. You will find more information under Microsoft learn page.

d) Account name: This is the full name of your Snowflake account, to find this information in Snowflake you need to go to Admin->Accounts and find out the LOCATOR as shown in figure 4.

If you hover over the LOCATOR information, you will find the URL as shown in fig 5.

Please don't use full URL for the Account name in Linked Service, keep until https://hj46643.canada-central.azure

e) Database: Please find the database name from Snowflake, go to Databases->{choose the right Database} as shown in Fig 6

f) Warehouse: In Snowflake, you have warehouse in addition to the database. Please go to Admin->Warehouses->{choose your warehouse} as shown in fig 7. We have used the warehouse: AZUREFABRICDEMO

g) User name: User name of your Snowflake account

h) Password: Password of your Snowflake account

i) Role: Default role is PUBLIC, if you don't use any other role it will pick PUBLIC. I did not put any specific role so kept this field empty.

j) Test connection: Now you can test the connection before you save it.

k) Apply: If the earlier step "Test connection" is successful, please save the Linked service by clicking apply button.

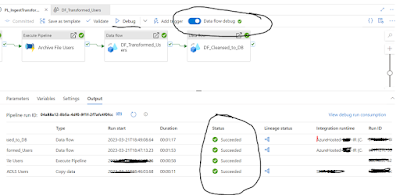

B. Creating a data pipeline with Copy activity

This activity includes connecting the source Snowflake and copying the data to the destination Azure data Lakehouse. The activity includes following steps:

1. Synapse Data pipeline

2. Source side of the Copy activity needs to connect with the Snowflake

3. Sink side of the Copy activity needs to connect with the Azure Data Lakehouse

1. Synapse Data pipeline

From the Azure Synapse Analytics, create a pipeline as shown Fig 8

And then drag and drop Copy activity from the canvas as shown in Fig 9, You will find Copy activity got source and sink side.

2. Source side of the Copy activity needs to connect with the Snowflake

Source side of the Copy activity needs to connect with the Snowflake Linked service that we created under the Activity A: Creating Linked service. Please find how you connect Snowflake from Synapse pipeline, at first choose "Source" and then click "New" as shown in below fig 10

3. Sink side of the Copy activity needs to connect with the Azure Data Lakehouse

Now we need to connect the sink side of the copy activity, fig 13 shows how to start with sink dataset.