It's all about Data

What I am passionate about!

Sunday, March 1, 2026

Vibe coding with Databricks: Step by step guide to setup your environment

Sunday, January 11, 2026

Agentic AI: How Not to Become Part of the 80% Failure Side!

Many research reports cite that 80%–85% of GenAI pilots fail, and Gartner predicts that over 40% of Agentic AI projects will be canceled by the end of 2027 [1].

Organizations have started building AI solutions — especially AI agents — to serve specific purposes, and in many cases, multiple agents are being developed. However, these solutions are often built in silos. While they may work well in proof-of-concept (PoC) stages, they struggle when pushed into production.

The biggest question that emerges at scale is trust.

Trust First: Security, Governance, and Cost Control

Before scaling AI, we must make it safe, compliant, and predictable in cost. That means:

- PII and sensitive data never leave our controlled boundary

- Every query is auditable and attributable

- Cost per interaction is enforced by design, not monitored after the bill arrives

Why this matters:

Without trust, AI adoption stops at the first incident. To gain trust, we need to guard against hallucination and confabulation, and we need to monitor and govern what the agent does. I have been using Databricks to monitor and govern an agent, and below I share, step by step, how you can set up AI governance in Databricks.

AI Governance with Databricks

Once you deploy your model as a serving endpoint, you can configure the Databricks AI Gateway, which includes:

• Input and Output guardrails

• Usage monitoring

• Rate limiting

• Inference tables

This setup is illustrated in Figure 1.

AI Guardrails: Filter input & output to prevent unwanted data, such as personal data or unsafe content. Figure 2 demonstrates how guardrails protect both input and output.
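To make the idea concrete, here is a minimal sketch of what an input and output filter does. It is not how Databricks implements guardrails; the function names and the single e-mail pattern are assumptions chosen for illustration, and a managed guardrail detects far more than e-mail addresses.

```python
import re

# Hypothetical pattern for illustration only; real PII detection
# covers many more data types than e-mail addresses.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def redact_pii(text: str) -> str:
    """Replace e-mail addresses with a placeholder."""
    return EMAIL.sub("[EMAIL]", text)


def guarded_call(model, prompt: str) -> str:
    """Apply the same filter on the way in and on the way out."""
    safe_prompt = redact_pii(prompt)   # input guardrail
    answer = model(safe_prompt)        # any callable that takes and returns str
    return redact_pii(answer)          # output guardrail
```

The point of the sketch is that the filter sits on both sides of the model call, so personal data is removed before the model sees the prompt and again before the caller sees the answer.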

Inference tables: Inference tables track the data sent to and returned from models, providing transparency and auditability over model interactions. Figure 3 shows where you can assign the inference table.

Rate Limiting: Enforce request rate limits to manage traffic for the endpoint. As shown in Fig 4, you can limit the number of queries and the number of tokens for a particular serving endpoint.

In Fig 4 above, QPM is the number of queries the endpoint can process per minute, and TPM is the number of tokens the endpoint can process per minute.
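To illustrate how a QPM limit and a TPM limit interact, here is a small sliding-window sketch. It is an assumption-laden illustration, not the algorithm Databricks uses: the class name, the one-minute sliding window, and the way tokens are counted are all hypothetical.

```python
from collections import deque


class EndpointRateLimiter:
    """Sliding one-minute window that enforces both a QPM and a TPM limit."""

    def __init__(self, qpm: int, tpm: int, window_seconds: float = 60.0):
        self.qpm = qpm
        self.tpm = tpm
        self.window = window_seconds
        self._events = deque()  # (timestamp, tokens) of accepted requests

    def allow(self, now: float, tokens: int) -> bool:
        # Drop accepted requests that have aged out of the window.
        while self._events and now - self._events[0][0] >= self.window:
            self._events.popleft()
        used_tokens = sum(t for _, t in self._events)
        if len(self._events) + 1 > self.qpm:
            return False  # query limit reached
        if used_tokens + tokens > self.tpm:
            return False  # token limit reached
        self._events.append((now, tokens))
        return True


limiter = EndpointRateLimiter(qpm=2, tpm=1000)
print(limiter.allow(now=0.0, tokens=400))   # accepted
print(limiter.allow(now=1.0, tokens=700))   # rejected: 400 + 700 exceeds 1000 tokens
print(limiter.allow(now=2.0, tokens=500))   # accepted
print(limiter.allow(now=3.0, tokens=50))    # rejected: would be a third query in the window
print(limiter.allow(now=61.0, tokens=50))   # accepted: the first request has aged out
```

A request must pass both checks, so a few very large prompts can exhaust the token budget long before the query budget is reached.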

Usage Tracking: Databricks provides built-in usage tracking so you can monitor:

• Who is calling the APIs or model endpoints

• How frequently they are used

Usage data is available in the System table: system.serving.endpoint_usage
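The two questions above (who is calling, and how often) boil down to a group-and-count over usage records. The sketch below works on in-memory sample rows rather than the real system table; the field names `requester` and `endpoint` and the sample values are assumptions for illustration, so check the actual table schema in your workspace before relying on them.

```python
from collections import Counter

# Hypothetical sample rows standing in for usage records.
sample_rows = [
    {"requester": "alice@example.com", "endpoint": "support-agent"},
    {"requester": "bob@example.com", "endpoint": "support-agent"},
    {"requester": "alice@example.com", "endpoint": "sales-agent"},
    {"requester": "alice@example.com", "endpoint": "support-agent"},
]


def calls_per_requester(rows):
    """Count how many requests each caller made."""
    return Counter(row["requester"] for row in rows)


def calls_per_endpoint(rows):
    """Count how many requests each endpoint received."""
    return Counter(row["endpoint"] for row in rows)


for requester, calls in calls_per_requester(sample_rows).most_common():
    print(requester, calls)
```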

In summary, Databricks offers the tools needed to build trusted and governed Agentic AI solutions, helping you land on the 20% success side of AI adoption.

[1] MIT report: 95% of generative AI pilots at companies are failing | Fortune

Saturday, January 25, 2025

Why Data Strategy Is More Crucial Than Ever for Your Enterprise

From executives to frontline teams, everyone in the organization is talking about GenAI applications. Now, a new buzzword is gaining traction: “Autonomous Agents.” In the near future, organizations won’t just have a handful of these agents; they will likely manage hundreds, if not thousands. As their presence grows, so will the challenges of ensuring security, compliance, and governance. Effectively managing this new wave of AI-driven automation will be critical to maintaining trust, control, and operational efficiency.

The data products we build in any organization rely on data. And when we talk about data, it must be fresh, reliable, secure, and compliant at any given point in time. Your organization can invest heavily in building data products, but if the data itself is unreliable, insecure, or non-compliant, these products will fail to deliver value to the business.

A well-defined data strategy plays a critical role in ensuring that your organization delivers fresh, reliable, secure, and compliant data. It establishes governance frameworks, data quality measures, and security policies that safeguard the integrity of data assets. A strong data strategy ensures that AI agents and other data-driven applications operate with trustworthy information.

In my next blog post, I’ll be sharing the foundational building blocks of a successful Data Strategy: practical insights you can apply to your own organization. I’d also love to hear from my network: If you’ve already implemented a modern data strategy, what lessons have you learned? Any tips or best practices you’d like to share? Let’s learn from each other!

Saturday, March 23, 2024

Bridging Snowflake and Azure Data Stack: A Step-by-Step Guide

In today's era of hybrid data ecosystems, organizations often find themselves straddling multiple data platforms for optimal performance and functionality. If your organization uses both Snowflake and the Microsoft data stack, you might need to transfer data from the Snowflake Data Warehouse to the Azure Lakehouse.

Fear not! This blog post will walk you through the detailed step-by-step process of achieving this data integration seamlessly.

As an example, Fig 1 below shows a Customer table in the Snowflake data warehouse.

To get this data from Snowflake to the Azure Data Lakehouse, we can use a cloud ETL tool such as Azure Data Factory (ADF), Azure Synapse Analytics, or Microsoft Fabric. For this blog post, I have used Azure Synapse Analytics to extract the data from Snowflake. There are two main activities involved in Azure Synapse Analytics:

A. Creating Linked Service

B. Creating a data pipeline with Copy activity

Activity A: Creating Linked service

In Azure Synapse Analytics you need to create a Linked Service (LS), which provides the connectivity between Snowflake and Azure Synapse Analytics.

Follow these steps to create the Linked Service:

Step 1) Azure Synapse has a built-in connector for Snowflake. Click New Linked service, search for the "Snowflake" connector, and click Next, as shown in Fig 2 below.

b) Linked service description: Provide the description of the Linked service.

c) Integration runtime: An integration runtime is required for the Linked Service. The Integration Runtime (IR) is the compute infrastructure used by Azure Data Factory and Azure Synapse pipelines. You will find more information on the Microsoft Learn page.

d) Account name: This is the full name of your Snowflake account. To find it in Snowflake, go to Admin->Accounts and look for the LOCATOR, as shown in Fig 4.

If you hover over the LOCATOR information, you will find the URL as shown in fig 5.

Don't use the full URL for the Account name in the Linked Service; keep only the part up to https://hj46643.canada-central.azure
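As a small sketch of that trimming step: the helper below cuts the URL at the Snowflake domain suffix and keeps what comes before it. The function name is hypothetical, and the sketch assumes the full URL shown when hovering over the LOCATOR contains `.snowflakecomputing.com`.

```python
# Assumption: the full account URL contains this Snowflake domain suffix.
SNOWFLAKE_SUFFIX = ".snowflakecomputing.com"


def trim_account_url(full_url: str) -> str:
    """Keep everything before the Snowflake domain suffix."""
    head, sep, _ = full_url.partition(SNOWFLAKE_SUFFIX)
    if not sep:
        raise ValueError(f"expected {SNOWFLAKE_SUFFIX!r} in {full_url!r}")
    return head


print(trim_account_url("https://hj46643.canada-central.azure.snowflakecomputing.com"))
# prints https://hj46643.canada-central.azure
```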

e) Database: Find the database name in Snowflake by going to Databases->{choose the right Database}, as shown in Fig 6.

f) Warehouse: In Snowflake, you have a warehouse in addition to the database. Go to Admin->Warehouses->{choose your warehouse}, as shown in Fig 7. We have used the warehouse AZUREFABRICDEMO.

g) User name: User name of your Snowflake account

h) Password: Password of your Snowflake account

i) Role: The default role is PUBLIC; if you don't specify another role, PUBLIC is used. I did not need a specific role, so I left this field empty.

j) Test connection: Now you can test the connection before you save it.

k) Apply: If the earlier step "Test connection" is successful, save the Linked Service by clicking the Apply button.

Activity B: Creating a data pipeline with Copy activity

This activity connects to the Snowflake source and copies the data to the destination, the Azure Data Lakehouse. It includes the following steps:

1. Synapse Data pipeline

2. Source side of the Copy activity needs to connect with the Snowflake

3. Sink side of the Copy activity needs to connect with the Azure Data Lakehouse

1. Synapse Data pipeline

From Azure Synapse Analytics, create a pipeline as shown in Fig 8.

Then drag and drop the Copy activity onto the canvas as shown in Fig 9. You will see that the Copy activity has a source side and a sink side.

2. Source side of the Copy activity needs to connect with the Snowflake

The source side of the Copy activity needs to connect to the Snowflake Linked Service that we created under Activity A: Creating Linked service. To connect to Snowflake from the Synapse pipeline, first choose "Source" and then click "New", as shown in Fig 10 below.

3. Sink side of the Copy activity needs to connect with the Azure Data Lakehouse

Now we need to connect the sink side of the Copy activity. Fig 13 shows how to start with the sink dataset.

Wednesday, November 29, 2023

How to solve available workspace capacity exceeded error (Livy session) in Azure Synapse Analytics?

Livy session errors in Azure Synapse with Notebook

If you are working with notebooks in Azure Synapse Analytics and multiple people are using the same Spark cluster at the same time, chances are high that you have seen one of the errors below:

"Failed to create Livy session for executing notebook. Error: Your pool's capacity (3200 vcores) exceeds your workspace's total vcore quota (50 vcores). Try reducing the pool capacity or increasing your workspace's vcore quota. HTTP status code: 400."

"InvalidHttpRequestToLivy: Your Spark job requested 56 vcores. However, the workspace has a 50 core limit. Try reducing the numbers of vcores requested or increasing your vcore quota. Quota can be increased using Azure Support request"

Figure 1 below shows one of these errors while running a Synapse notebook.

What is Livy?

Will increasing the vCores fix it?

By looking at the error message you may attempt to increase the vCores; however, increasing the vCores will not solve your problem.

Let's look at how a Spark pool works. By definition, when a Spark pool is instantiated, it creates a Spark instance that processes the data. Spark instances are created when you connect to a Spark pool, create a session, and run a job, and multiple users may have access to a single Spark pool. When multiple users run jobs at the same time, the first job may already have used most of the vCores, so a job submitted by another user fails with the Livy session error.
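The arithmetic behind that failure can be sketched in a few lines. The quota of 50 vcores and the request for 56 vcores come from the error messages quoted earlier; the other numbers and the function name are hypothetical, and this is an illustration of the reasoning, not Synapse's actual admission logic.

```python
def can_start_session(quota_vcores: int, in_use_vcores: int, requested_vcores: int) -> bool:
    """A session fits only if the vcores already in use plus the request stay within the quota."""
    return in_use_vcores + requested_vcores <= quota_vcores


WORKSPACE_QUOTA = 50  # vcore quota from the error message

# A single job asking for more than the whole quota fails outright.
print(can_start_session(WORKSPACE_QUOTA, in_use_vcores=0, requested_vcores=56))   # False

# The first user's job fits on an idle pool.
print(can_start_session(WORKSPACE_QUOTA, in_use_vcores=0, requested_vcores=40))   # True

# A second user's job fails while the first job holds 40 vcores.
print(can_start_session(WORKSPACE_QUOTA, in_use_vcores=40, requested_vcores=16))  # False
```

The third case is the one described above: the second request is modest on its own, but it is rejected because of what other sessions are already holding.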

How to solve it?

Saturday, July 1, 2023

Provisioning Microsoft Fabric: A Step-by-Step Guide for Your Organization

Before we look into Fabric capacity, let's go through the different licensing models. There are three types of licenses you can choose from to start working with Microsoft Fabric:

1. Microsoft Fabric trial

It's free for two months and you need to use your organization email. Personal email doesn't work. Please find how you can activate your free trial

2. Power BI Premium Per Capacity (P SKUs)

If you already have a Power BI premium license you can work with Microsoft Fabric.

3. Microsoft Fabric Capacity (F SKUs)

With your organizational Azure subscription, you can create Microsoft Fabric capacity. You will find more details about Microsoft Fabric licenses

We will go through the steps in detail on how Microsoft Fabric capacity can be provisioned from Azure Portal.

Step 1: Log in to your organization's Azure Portal and search for Microsoft Fabric, as shown in Fig 1 below.

Step 2: To create the Fabric capacity, the very first step is to create a resource group as shown in Fig 2

Step 3: To create the right capacity, choose the resource size that you require. For example, I have chosen F8, as shown in Fig 3 below.

You will find more capacity details in this Microsoft blog post.

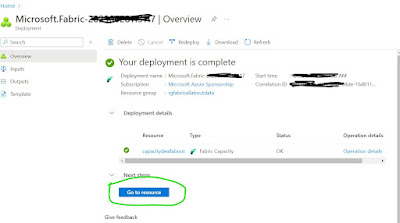

Step 4: As shown below in Fig 4, before creating the capacity please review all the information you have provided including resource group, region, capacity size, etc., and then hit the create button.

When it's done, you will be able to see that the Microsoft Fabric capacity has been created (see Fig 5 below).