You can have a situation where your SSAS database ID and Name can be miss matched. If you try to manually change the Database Name but ID still the same; this could happen when you move solution from development to Test and then change the name but still ID did not change.

What could possibly happen if the ID and Name are not same:

1) If you deploy SSAS package via Visual Studio it will look like processing but actually you will not find updated processed cube. (you can look at the last processed property of the database)

2) If you have job agent and then try to run the job it will show like it's working but actually there will be no update of the cube. You can look at the last processed property timestamp.

So, it means your SSAS package will not be updated any more.

After the deployment of your SSAS Package your SSAS database look like below; where you have Tabular and OLAP cube.

|

| Fig 1: Analysis Server Database |

If you look at the tabular database closely and see the property where name and Id are miss matched.

|

| Fig 2: Name and Id miss match for the tabular cube |

What is the fix?

At first, you need to create script of the database as like below, which will generate .XMLA file:

|

| Fig 3: Create script of the Tabular database |

Secondly, You need to change the ID and Name from .xmla script and press 'F5' to execute the change. Now if you look into the databse you will find the ID and Name are completely matched.

|

| Fig 4: ID and Name is updated |

Now you will be able to deploy your package with new database name but with same metadata.

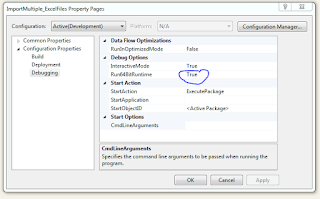

|

| Fig 5: Deploy package with new database Name |

You may not be happy at this point, since you wanted to have your original name back, well, it's simple just open SQL Server Data Tools and from the solution property change your Database name as like above screenshot (Fig 5), you can put your original Database name and then deploy; you are all set now. You may want to delete other database that you created, no worries; go ahead and delete the old one.